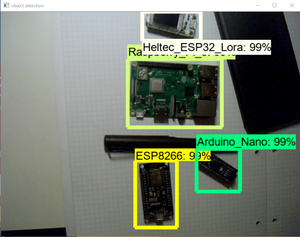

Detectron2 - Object Detection with PyTorch

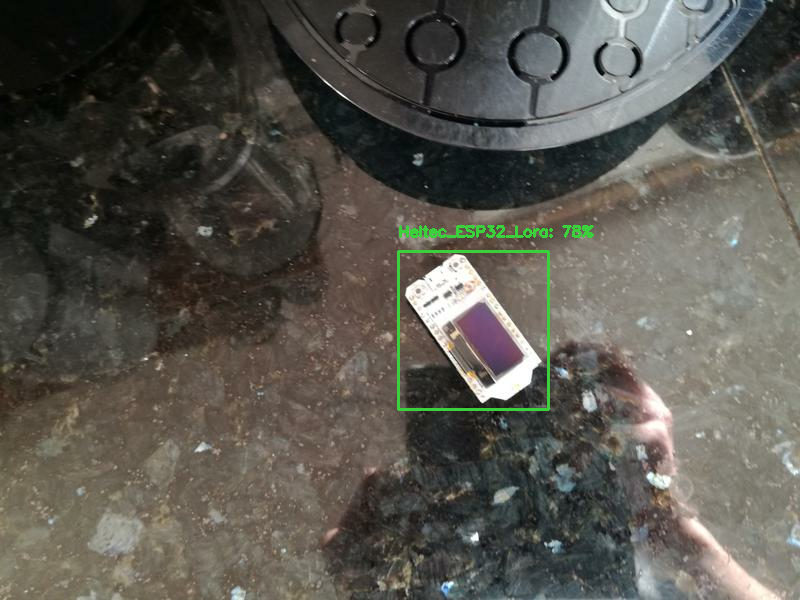

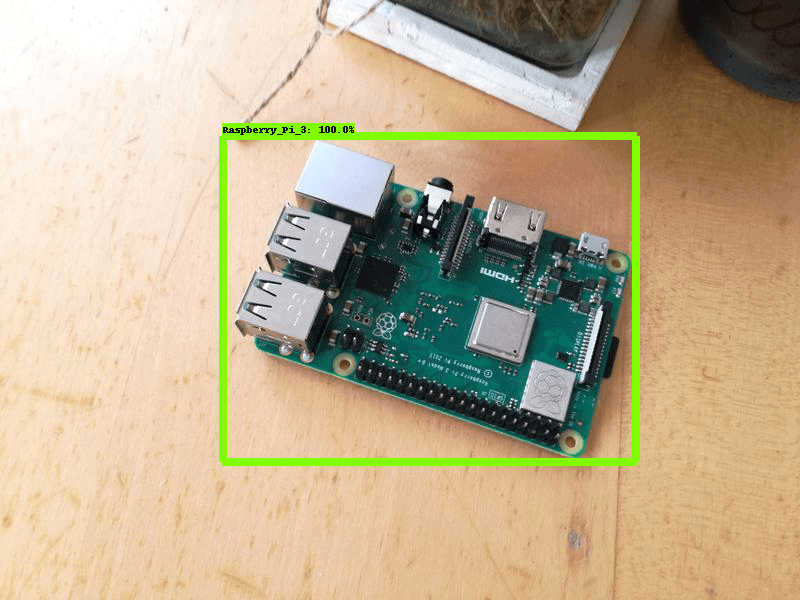

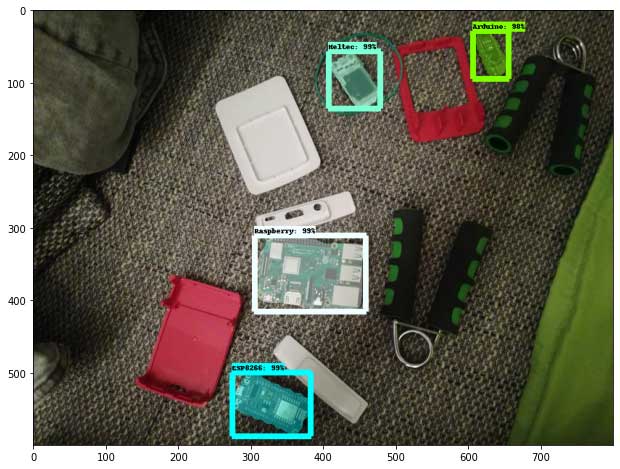

Detectron2 is Facebook's new vision library that allows us to easily use and create object detection, instance segmentation, keypoint detection, and panoptic segmentation models. Learn how to use it for both inference and training.