Interpreting PyTorch models with Captum

Interpret PyTorch models with Captum.

Learn about different techniques that let you interpret the behaviour of your machine learning and deep learning models.

Local model interpretation is a set of techniques aimed at answering questions like: Why did the model make this specific prediction? What effect did this specific feature value have on the prediction?

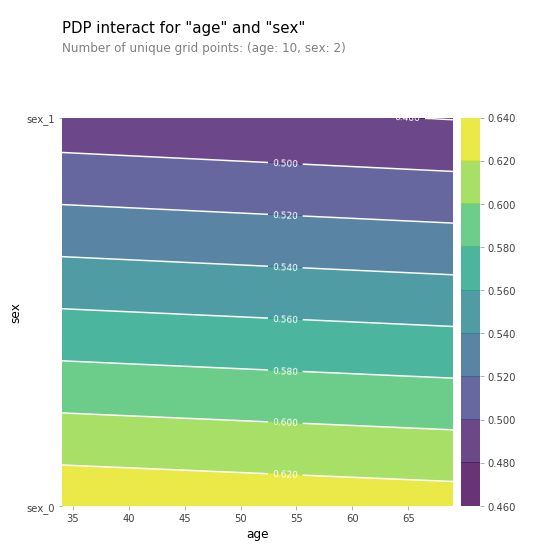

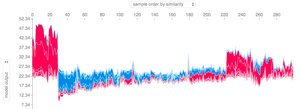
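To make the local question concrete, here is a minimal, dependency-free sketch of Integrated Gradients, one of the attribution methods Captum provides (as `captum.attr.IntegratedGradients`). The three-feature logistic model, its weights, the all-zeros baseline and the helper names (`model`, `model_grad`, `integrated_gradients`) are hypothetical and exist only for illustration. This reimplements the idea by hand and does not use Captum's API. Each feature's attribution estimates how much that feature value moved this one prediction away from the baseline prediction.

```python
import math

# Hypothetical toy model: logistic regression with hand-picked weights.
WEIGHTS = [2.0, -1.0, 0.5]
BIAS = -0.5


def model(x):
    """Return the predicted probability for a single input vector x."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def model_grad(x):
    """Analytic gradient of model(x) with respect to each input feature."""
    p = model(x)
    return [p * (1.0 - p) * w for w in WEIGHTS]


def integrated_gradients(x, baseline, steps=200):
    """Approximate Integrated Gradients with a midpoint Riemann sum.

    attribution_i = (x_i - baseline_i) * average of d model / d x_i
    along the straight path from baseline to x.
    """
    avg_grad = [0.0] * len(x)
    for k in range(steps):
        alpha = (k + 0.5) / steps  # midpoint of the k-th sub-interval
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        for i, g in enumerate(model_grad(point)):
            avg_grad[i] += g / steps
    return [(xi - b) * g for xi, b, g in zip(x, baseline, avg_grad)]


x = [1.0, 2.0, 3.0]          # the single input we want to explain
baseline = [0.0, 0.0, 0.0]   # assumed "neutral" reference input
attributions = integrated_gradients(x, baseline)

print("prediction:", round(model(x), 4))
for i, a in enumerate(attributions):
    print(f"feature {i}: {a:+.4f}")
# Completeness: the attributions should sum to model(x) - model(baseline).
print("sum:", round(sum(attributions), 4),
      "expected:", round(model(x) - model(baseline), 4))
```

A positive attribution means the feature value pushed this prediction up relative to the baseline, a negative one means it pushed it down. The completeness check is a quick sanity test that the approximation used enough steps.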

Global model interpretation is a set of techniques that help us answer questions like: How does the model behave in general? Which features drive its predictions, and which are completely useless for your task?
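One common global technique is permutation feature importance: shuffle one feature column across the whole dataset and measure how much the model's error grows. The sketch below is a plain-Python illustration of that idea, not Captum's API. The toy dataset, the stand-in `model` (which deliberately ignores feature 2) and the helper names are all hypothetical assumptions chosen so that the "useless" feature is easy to spot.

```python
import random

# Hypothetical toy data: the target depends on features 0 and 1 only;
# feature 2 is pure noise, so it should come out as unimportant.
random.seed(0)
X = [[random.uniform(-1, 1) for _ in range(3)] for _ in range(500)]
y = [3.0 * row[0] - 2.0 * row[1] for row in X]


def model(row):
    """Hypothetical stand-in for a trained model; it ignores feature 2."""
    return 3.0 * row[0] - 2.0 * row[1] + 0.0 * row[2]


def mse(rows, targets):
    """Mean squared error of the model over a dataset."""
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(rows, targets, n_repeats=5, seed=42):
    """Average increase in MSE when each feature column is shuffled."""
    rng = random.Random(seed)
    base = mse(rows, targets)
    importances = []
    for j in range(len(rows[0])):
        total = 0.0
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)  # break the link between feature j and the target
            permuted = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
            total += mse(permuted, targets) - base
        importances.append(total / n_repeats)
    return importances


for j, imp in enumerate(permutation_importance(X, y)):
    print(f"feature {j}: {imp:.4f}")
```

Features whose shuffling barely changes the error (feature 2 here) contribute little to the model's predictions overall, while a large increase marks a feature the model relies on across the dataset.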

Regardless of the problem you are solving, an interpretable model will generally be preferred, because both end users and your boss or co-workers can understand what the model is really doing.

Gilbert Tanner is a Robotics, Systems and Control student at ETH Zürich.